1.2 | Setting up a Pixotope machine

Video tutorial - Director walkthrough - SETUP (Pixotope 1.1).

Planning a multi-camera or multi-machine production?

Learn more about 1.2 | Single- and multi-machine setups.

Starting Director

- If this is the first time you have started the Director, please refer to Start Director

- Log in using a Live license

- Check to make sure you are in SETUP view (you can see from the drop-down in the top left-hand corner)

Learn more about the different views in Director: 1.2 | Adjusting levels from Director.

Creating a project

- Click "Create a new project"

- Name your project

- Only alphanumeric characters are allowed, and it is recommended that the name be no longer than 20 characters (Unreal Engine restriction)

- Set your project location

- Add it to the project list

- Set it as the current project

All changes under SETUP are saved under the current project. Setting another project to be the current project will load its setup instead.
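The naming rule in the steps above can be checked before a project is created. The following is a minimal sketch, not part of Pixotope: the function name `check_project_name` is hypothetical, and it assumes that "alphanumeric" means ASCII letters and digits only.

```python
import re

RECOMMENDED_MAX_LENGTH = 20  # a recommendation from the text above, not a hard limit


def check_project_name(name):
    """Return a list of problems with a proposed project name (empty if none)."""
    problems = []
    # Assumption: "alphanumeric" means ASCII letters and digits only.
    if not re.fullmatch(r"[A-Za-z0-9]+", name):
        problems.append("only alphanumeric characters are allowed")
    if len(name) > RECOMMENDED_MAX_LENGTH:
        problems.append(
            f"longer than the recommended {RECOMMENDED_MAX_LENGTH} characters"
        )
    return problems


print(check_project_name("NewsStudio01"))  # a name that follows both rules
print(check_project_name("my project!"))   # contains a space and punctuation
```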

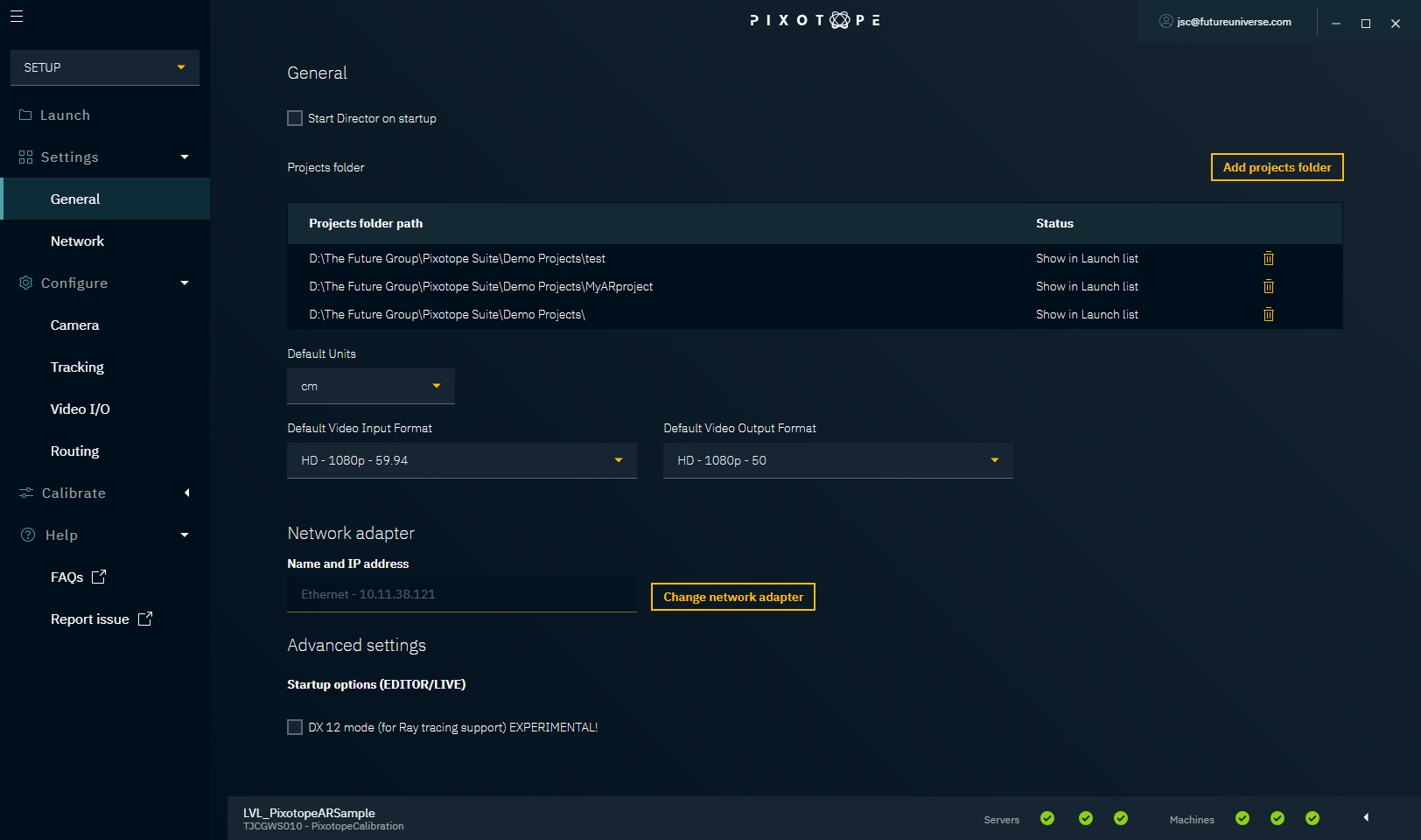

Settings

Under the "Settings" item on the main menu, there are 2 pages: "General" and "Network".

General

This page allows you to change the default settings to suit your production pipeline. These are general settings related to this installation of Director.

View settings can be found in the Windows menu, in the top left-hand corner.

Start Director on startup

This option starts Director automatically when you start up your machine.

Projects folder

This shows a list of projects folder paths on your local machine that are being "watched". All projects found are listed on the "Launch" page. This includes all projects in any subfolder. The demo projects folder path, chosen in the installation process, is listed by default.

Adding a project, level or control panel in one of the listed projects folder paths will automatically add them to the "Launch" page.

In multi-machine setups, the current projects folder must have the same local path on all machines.

Add projects folder

You can select a new projects folder path to be watched. All projects found in that folder or its subfolders will be added to the "Launch" page.

Adding a root folder such as C:\ is discouraged.

Remove a projects folder path

Clicking the trash can will remove that projects folder path. All projects within this parent path will be removed from the "Launch" page, unless there is an overlapping parent path.

Adding subfolders that overlap with other projects folder paths can be used to remove parent folders without removing the subfolders.
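The effect of overlapping projects folder paths can be illustrated with a small model. This is only a sketch of the behavior described above, not Director's actual implementation; the function `listed_projects` and the example paths are hypothetical.

```python
from pathlib import PureWindowsPath


def listed_projects(watched_folders, project_paths):
    """Return the projects that lie inside at least one watched folder."""
    watched = [PureWindowsPath(folder) for folder in watched_folders]
    listed = []
    for project in project_paths:
        path = PureWindowsPath(project)
        # A project is listed if any watched folder is one of its ancestors,
        # which covers projects in any subfolder.
        if any(folder in path.parents for folder in watched):
            listed.append(project)
    return listed


projects = [
    r"D:\Shows\News\Studio1",
    r"D:\Shows\Sports\Arena",
]

# Both the parent folder and an overlapping subfolder are watched.
print(listed_projects([r"D:\Shows", r"D:\Shows\News"], projects))

# After removing the parent, only projects under the subfolder remain listed.
print(listed_projects([r"D:\Shows\News"], projects))
```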

Default settings

Default Units

Choose the preferred units for the Unreal Engine and the Camera Tracking Server.

Default Video Input and Output Format

Choose the default video format to be used in Configure → Video I/O.

Network adapter

Sets the network adapter to be used for communication between Pixotope machines.

Advanced settings

Startup options (Editor/LIVE)

DX 12 mode

This starts the Editor/Engine with the DX 12 flag. To fully enable ray tracing, ensure that:

- Windows is running version 1809 or later

- DirectX 12 is installed

- the latest NVIDIA RTX graphics drivers are installed

- Ray Tracing is turned on in Pixotope Editor Project Settings → Rendering
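The first item in the checklist above can be verified from a script. The sketch below only covers the Windows version; it does not check DirectX 12, the graphics driver, or the project setting. It relies on Windows 10 version 1809 corresponding to OS build 17763. The function name is hypothetical.

```python
import platform

MIN_BUILD_FOR_1809 = 17763  # Windows 10 version 1809 is OS build 17763


def windows_build_is_1809_or_later(version_string):
    """Check a Windows version string such as '10.0.19045' against build 17763."""
    parts = version_string.split(".")
    try:
        major, build = int(parts[0]), int(parts[2])
    except (IndexError, ValueError):
        return False  # not a 'major.minor.build' string
    return major >= 10 and build >= MIN_BUILD_FOR_1809


# platform.version() returns a 'major.minor.build' string on Windows.
if platform.system() == "Windows":
    print(windows_build_is_1809_or_later(platform.version()))
```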

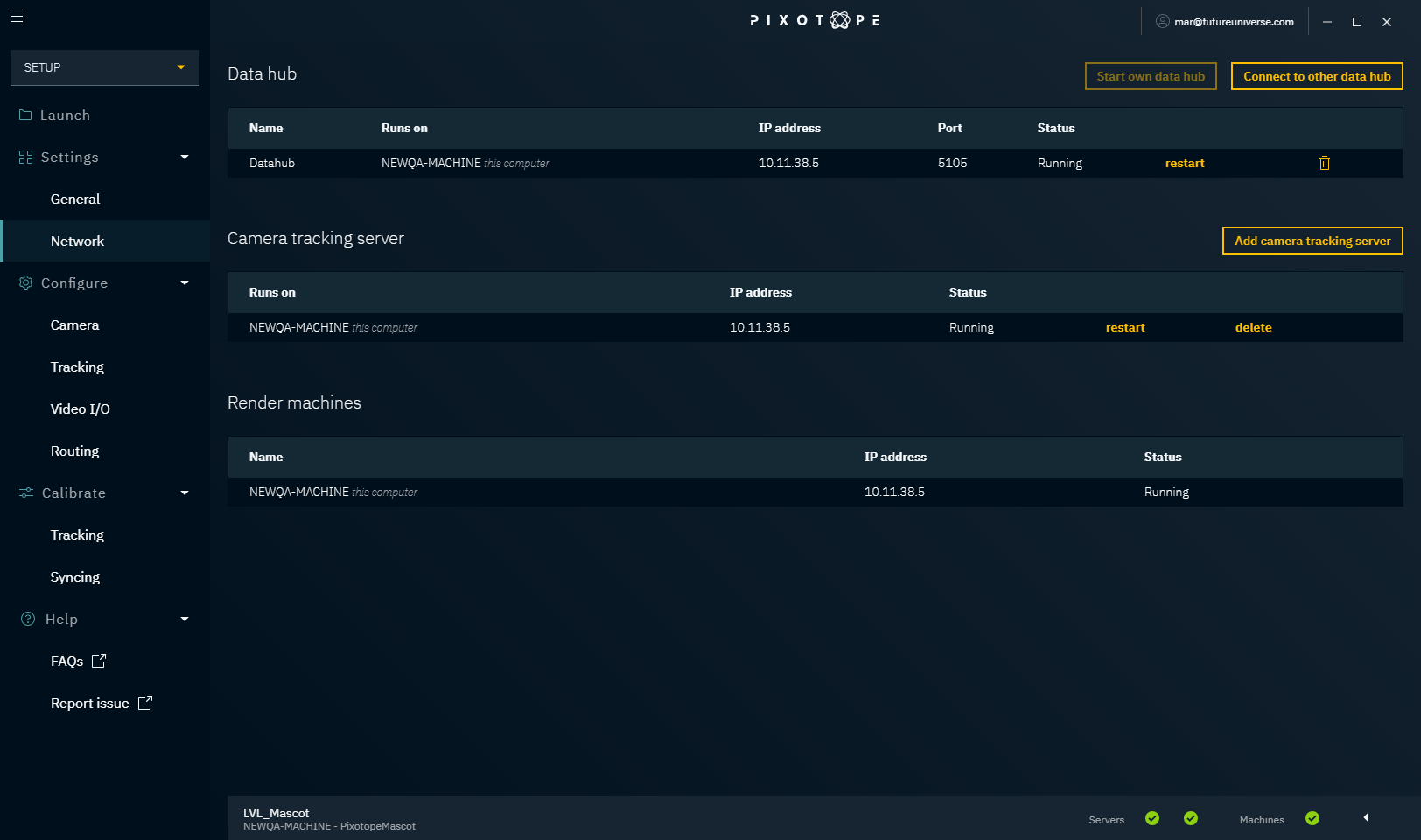

Network

The "Network" page gives you control over your network setup and allows you to check that all the servers (Data hub and Camera Tracking Server) are up and running.

For an overview, you can use the Status bar, which is present on all pages at the bottom of the Director.

Data hub

The Data hub connects and handles all communication between the Pixotope machines of a virtual production. Each production needs just one Data hub.

Learn more about 1.2 | Single- and multi-machine setups.

Here you can:

- change who runs the Data hub

- restart the Data hub

- disconnect from a remote Data hub

- stop your local Data hub

Camera Tracking Server

The Camera Tracking Server receives and translates the camera tracking data and sends it on to the Pixotope Editor.

Here you can:

- add a Camera Tracking Server

- 1 Camera Tracking Server can be added/launched on each connected machine

- restart a Camera Tracking Server

- stop a Camera Tracking Server

Render machines

This section lists all machines connected to the same Data hub.

Configure

Under the "Configure" item on the main menu, there are several pages. These allow you to configure your Pixotope setup in accordance with your physical studio setup. This includes informing the system about your camera systems, camera tracking systems, SDI video inputs and outputs, and video and tracking routing.

Import Setup

All configuration settings are stored per project and on each Director machine individually. You can use "Import Setup from ..." to easily import setups from other projects or other machines.

To import a setup into the current project:

- Click "Import Setup from ..." in the "Launch" panel

- Select the machine you want to import a setup from

- Select the project

- Select the camera systems and media inputs and outputs to import

- Camera systems and media inputs and outputs with the same name will be overwritten
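The overwrite-by-name behavior in the last step can be pictured as a dictionary merge. This is an illustrative model only: how Director stores a setup internally is not documented here, and the function `import_setup` and the example entries are hypothetical.

```python
def import_setup(current, imported, selected_names):
    """Return the merged setup and the names that were overwritten."""
    merged = dict(current)  # leave the caller's setup untouched
    overwritten = [name for name in selected_names if name in current]
    for name in selected_names:
        # An imported entry replaces any existing entry with the same name.
        merged[name] = imported[name]
    return merged, overwritten


current_setup = {"Cam1": {"filmback": "A"}, "Monitor": {"format": "1080p"}}
other_setup = {"Cam1": {"filmback": "B"}, "Cam2": {"filmback": "C"}}

merged, overwritten = import_setup(current_setup, other_setup, ["Cam1", "Cam2"])
print(sorted(merged))  # names present after the import
print(overwritten)     # names whose settings were replaced
```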

The "Final result" dialog shows whether any problems have occurred while importing or applying the setup. If there were, please check Configure → Routing and Configure → Tracking and redo the routing.

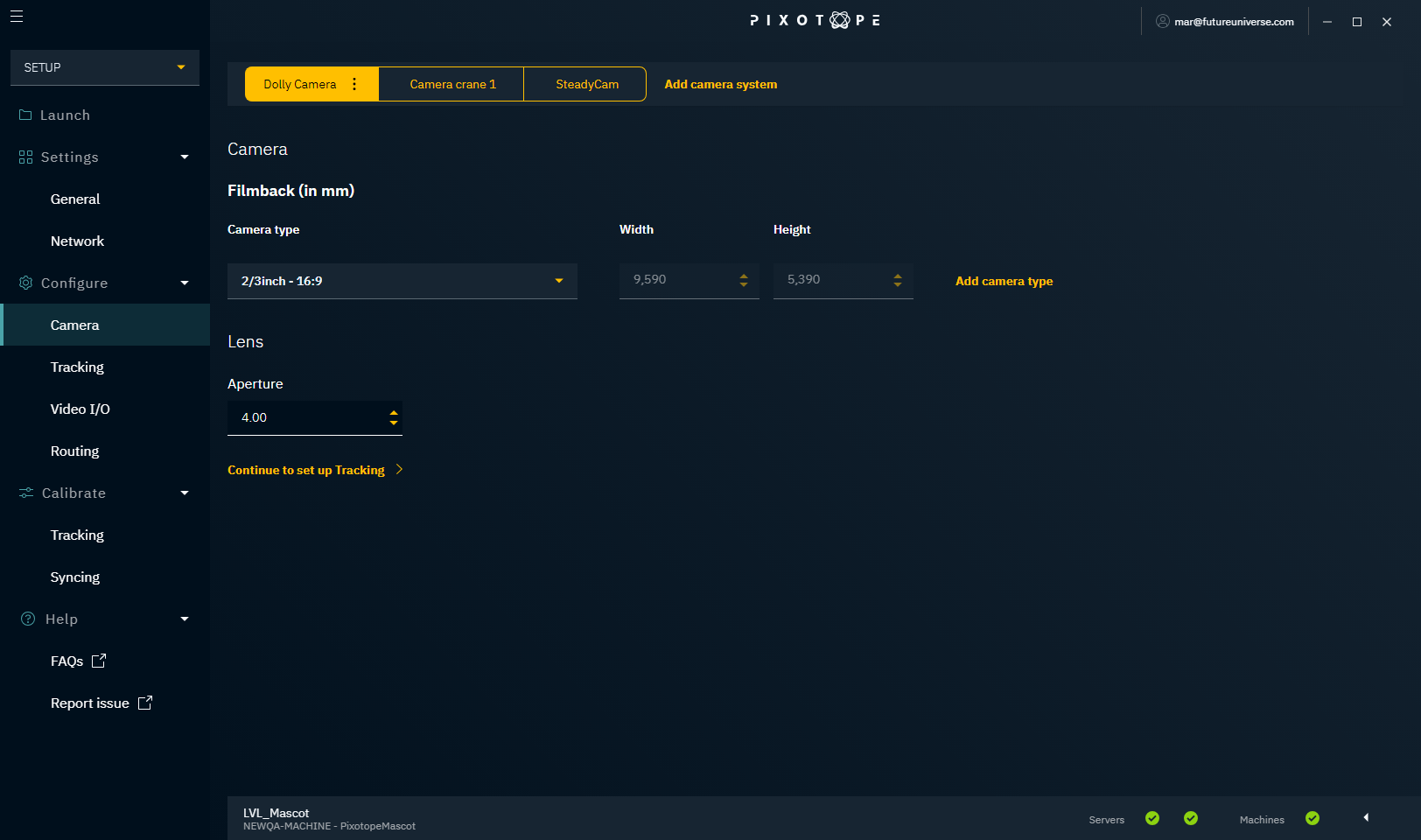

Camera

Add camera system

A camera system consists of a camera, its lens, and a tracking system. The result is a tracked camera; which parameters are tracked depends on the tracking system used.

- Click "Add camera system"

- Give it a descriptive name

- Add it

- The IP and Port you need to send the tracking data to can be viewed later in Configure → Tracking

- Choose a filmback size

- Choose from the drop-down of known cameras, or refer to the documentation of your camera and create your own

- Choose your lens aperture

- This is important when playing with depth of field later on

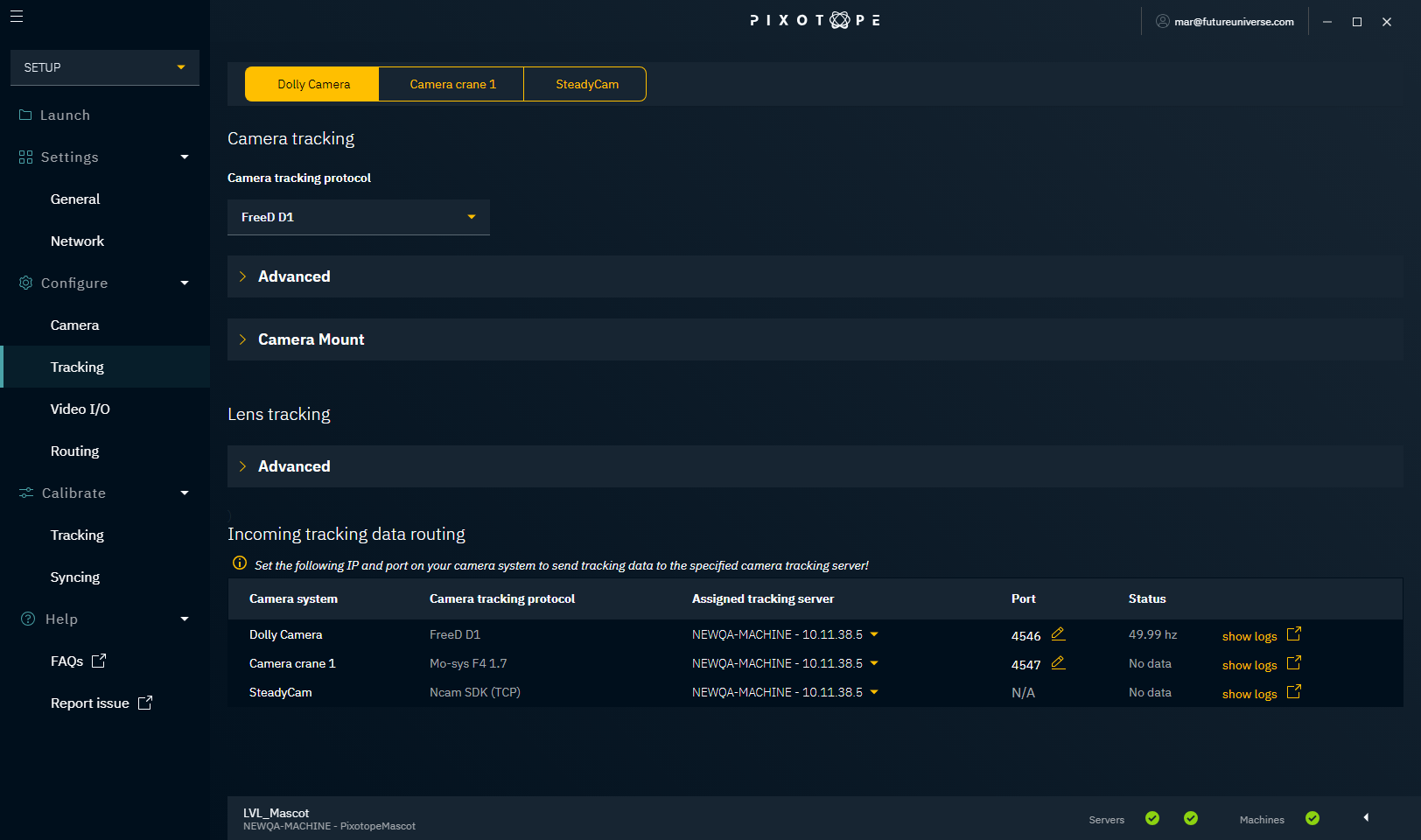

Tracking

Here you configure everything related to the camera tracking system.

Camera tracking

Camera tracking protocol

Choose the camera tracking protocol your camera tracking system uses.

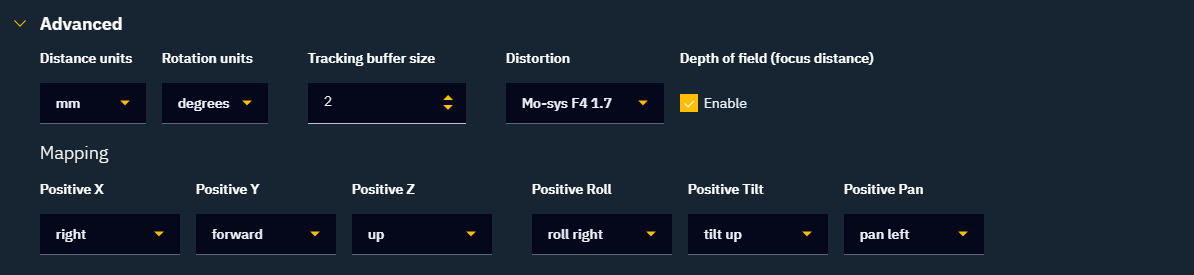

Advanced

The "Advanced" section covers protocol-specific details and how the data should be mapped. When you calibrate tracking, you might have to come back here in case the camera movement is mapped wrongly.

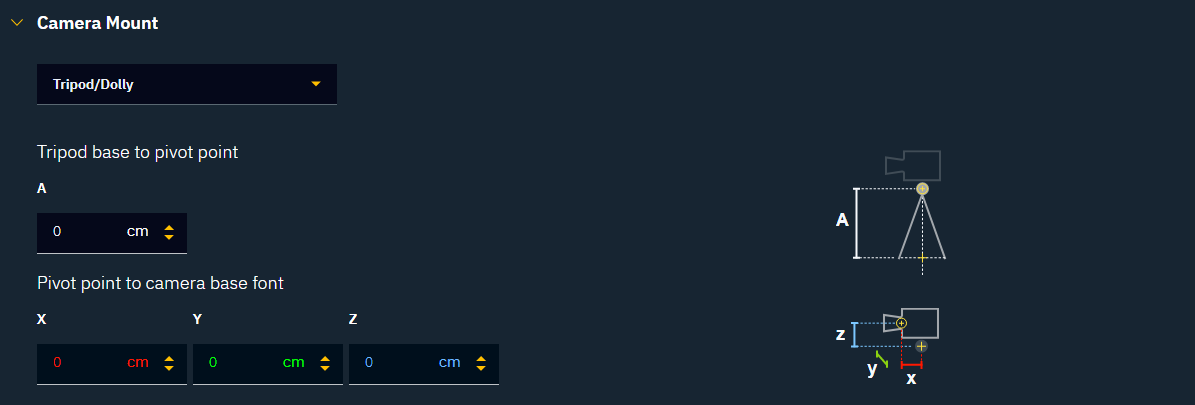

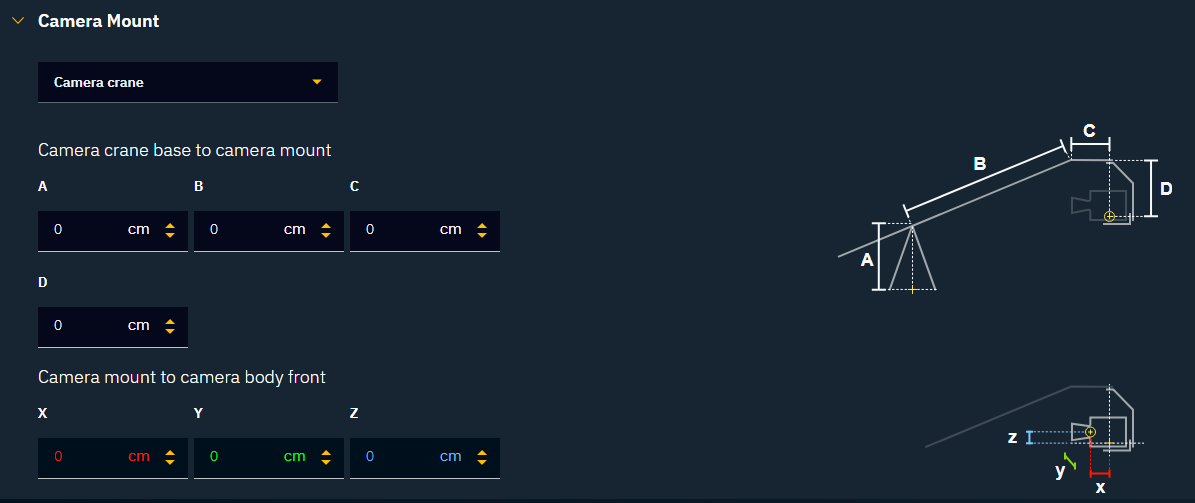

Camera Mount

Depending on the camera tracking protocol, you can choose between 3 camera mount types:

- No mount setup

  - For advanced camera tracking systems that provide correct position and rotation values out of the box

  - For untracked cameras

- Tripod/Dolly

  - For tripods and dolly-mounted cameras

- Camera crane

  - For camera-crane-mounted cameras

The helper images provide information on the measurements needed.

Learn more about the Camera tracking server.

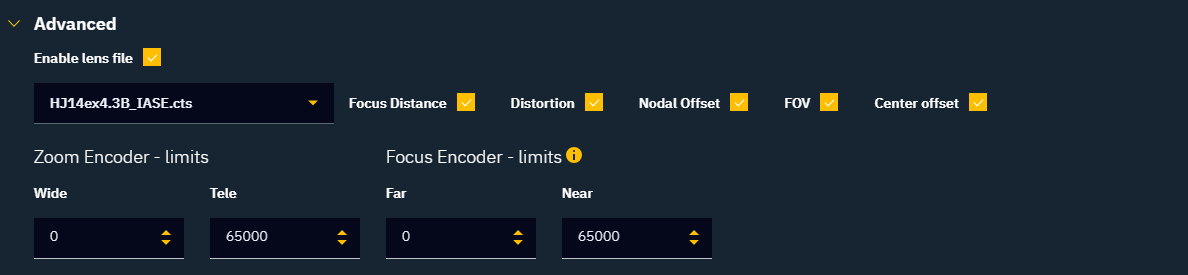

Lens tracking

Camera tracking systems most often have lens data (focus and/or zoom) integrated into their configurations. However, if you need to use a custom lens configuration, choose the lens file from the drop-down menu, and optionally edit the zoom and focus encoder limits.

Advanced

A lens file contains additional calibration data for the lenses you are using. It can include the following parameters: Focus Distance, Distortion, Nodal Offset, FOV, and Center offset. These files can be generated:

- manually by using the provided lens file template

- by our internal Lens Calibration tool

- from lens files of other manufacturers, using our internal conversion tools

Learn more about 1.2 | Lens files in Pixotope.

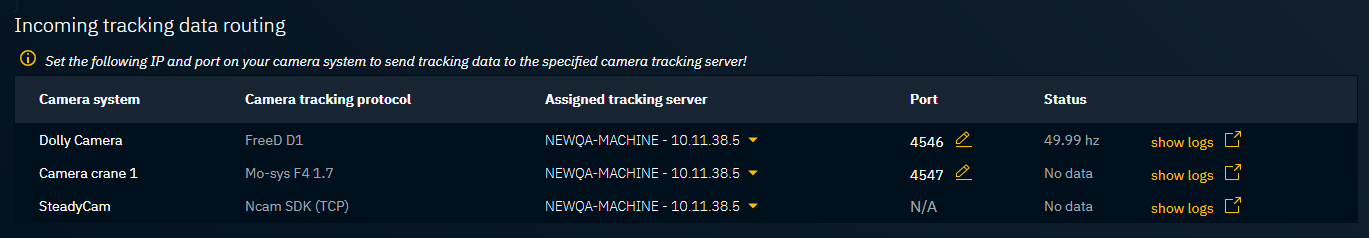

Incoming tracking data routing

- Choose which Camera Tracking Server should be assigned to which camera system

- Best practice: Run a separate Camera Tracking Server for every camera system on the machine the camera system will be routed to

- Change the port if needed

- On your tracking system, enter the IP address and port number of the assigned Camera Tracking Server

- This is where your tracking data should be sent to

- For the Ncam system, the IP address and port number should be entered in the Advanced section of the camera tracking protocol

- Check the status field

- Your tracking configuration is set up correctly if it shows incoming data

- You can also check the 1.2 | Network status in the Editor
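If the status field shows no incoming data, it can help to confirm independently that packets reach the machine at all. The sketch below assumes that your tracking system sends its data as UDP datagrams, which depends on the tracking protocol and is not stated above. The function name `wait_for_packet` and the port used in the usage note are hypothetical.

```python
import socket


def wait_for_packet(port, timeout_s=2.0, host="0.0.0.0"):
    """Return (sender_address, byte_count) of the first datagram, or None on timeout."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        sock.settimeout(timeout_s)
        try:
            data, sender = sock.recvfrom(65535)
        except socket.timeout:
            return None  # nothing arrived within the timeout
        return sender, len(data)
```

For example, `wait_for_packet(6301)` waits up to two seconds for a datagram on port 6301. A port can usually be bound by only one process at a time, so run such a check while the Camera Tracking Server assigned to that port is stopped, or on a spare port that the tracking system is temporarily pointed at.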

Video I/O

Video input

- Add additional media inputs, if you need untracked video sources in your level. For example, the input for a virtual monitor

- Name your media input

- Optionally override the default settings:

- Choose the input format (resolution and frame rate) of your camera systems and media inputs

- To activate the deinterlacer, choose an input format with the "id" suffix (for example, "HD - 1080id - 50")

- Choose the color profile

- Choose a color space from the selected color profile

- Choose the transfer type

- Choose the input buffer size - this increases the stability of the input signal, but adds latency to the system

- Choose the input type:

  - For a camera system - video signal with tracking data

    - Internal Keyer - the video is keyed with Pixotope's internal keyer

    - External Keyer Fill/Key - the video is keyed externally and comes in using a Fill and Key signal

    - Input Camera - the video is used as is

  - For a media input - video signal with no tracking

    - Input Media - the video is used as is

    - Input Media with Key - the video is keyed externally and comes in using a Fill and Key signal


Best practice for SDR/HDR pipelines

SDR camera → SDR pipeline/display

- Compositing mode: "Video" in PRODUCTION → Composite → General

- Color management settings are ignored

- Optional: use tone mapper for 3D graphics

For advanced users

- Compositing mode: "Linear" in PRODUCTION → Composite → General

- Video Input: Choose "Rec.709 Input"

- Video Output: Choose "Rec.709 Output"

- Artistic choice: experiment with the Rec.709 alternatives

HDR camera → SDR pipeline/display

- Compositing mode: "Linear" in PRODUCTION → Composite → General

- Video Input: Choose the HDR camera's color space

- Video Output: Choose "Rec.709 Output"

- Artistic choice: experiment with the Rec.709 alternatives

HDR camera → Output follows input

- Compositing mode: "Linear" in PRODUCTION → Composite → General

- Video Input: Choose the HDR camera's color space

- Video Output: Choose the same color space as set in Video Input

The inverse of the input color space conversion is applied on output, so the output color space is exactly the same as the HDR camera's color space.
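Why the output matches the input can be seen with a toy transfer function. The sketch below uses a simple power law as a stand-in; a real HDR camera curve is more complex, and the exponent and function names are assumptions made for illustration. It only shows the round trip for a video value that compositing leaves unchanged.

```python
GAMMA = 2.4  # stand-in exponent, not the curve of any particular camera


def to_linear(encoded):
    """Input conversion: camera-encoded value to linear working space."""
    return encoded ** GAMMA


def from_linear(linear):
    """Output conversion: the exact inverse of the input conversion."""
    return linear ** (1.0 / GAMMA)


for value in (0.0, 0.18, 0.5, 1.0):
    round_trip = from_linear(to_linear(value))
    # The round trip returns the original value up to floating-point error.
    print(f"{value:.2f} -> {round_trip:.6f}")
```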

HDR camera → HDR pipeline/display

- Compositing mode: "Linear" in PRODUCTION → Composite → General

- Video Input: Choose the HDR camera's color space

- Video Output: Choose the color space of the HDR pipeline/display

Artist with no video I/O

- Compositing mode: "Video" in PRODUCTION → Composite → General

Upcoming features

For Linear compositing mode:

- A tone mapper for 3D graphics

- A view LUT to simulate the output color space while working in the Pixotope Editor

Learn more about HDR and Color management.

ACES 1.1

Currently Pixotope ships with a version of OCIO configs for ACES 1.1: https://github.com/colour-science/OpenColorIO-Configs/tree/feature/aces-1.1-config/aces_1.1

Create custom color transforms

If you need to create custom color transforms, this online tool can create OCIO-compatible Look Up Tables (spi1d, spi3d): https://cameramanben.github.io/LUTCalc/LUTCalc/index.html

How to add custom color profiles

We currently support OpenColorIO:

- Download an OpenColorIO config folder - https://github.com/imageworks/OpenColorIO-Configs

- Place the folder in

[Your installation path]\Pixotope Editor\Engine\Plugins\Pixotope\TTMPlugin\TTMBinaries\ocio-configs

- Switch panels to update the available color profiles
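The copy step above can be scripted. This is a hedged sketch: the function name `install_ocio_config` is hypothetical, the installation path must be supplied by you, and the check for a `config.ocio` file reflects the usual layout of OpenColorIO config folders rather than a documented Pixotope requirement.

```python
import shutil
from pathlib import Path

# Subfolder taken from the path given in the steps above.
OCIO_SUBPATH = Path(
    "Pixotope Editor", "Engine", "Plugins", "Pixotope",
    "TTMPlugin", "TTMBinaries", "ocio-configs",
)


def install_ocio_config(config_folder, installation_path):
    """Copy an OpenColorIO config folder into the ocio-configs folder."""
    source = Path(config_folder)
    # Assumption: a usable config folder contains a config.ocio file.
    if not (source / "config.ocio").is_file():
        raise FileNotFoundError(f"no config.ocio found in {source}")
    target = Path(installation_path) / OCIO_SUBPATH / source.name
    # copytree creates missing parent folders and fails if the target exists.
    shutil.copytree(source, target)
    return target
```

Call it with the downloaded config folder and your own installation path, then switch panels in Director so that the list of color profiles is refreshed.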

Learn more about OpenColorIO.

How to work with interlaced video

In simple AR and VS compositing scenarios where the video is not altered except for being composited, interlaced video can be used straight through. Make sure the correct video input format is set in SETUP → Configure → Video I/O (for example, "HD - 1080i - 50").

In more complex scenarios, especially in virtual set scenarios where the virtual camera, reflections and other advanced effects are used, the best results will be achieved if a progressive input signal is used. If a progressive source is not available you can use Pixotope's built-in deinterlacer.

- Go to SETUP → Configure → Video I/O

- In "Video Input Format", choose an input format with the "id" suffix (for example, "HD - 1080id - 50")

For best results, choose an interlaced video format for the output
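The difference between the "i" and "id" suffixes can be made explicit by parsing the format name. The pattern below is inferred from the two example names on this page ("HD - 1080i - 50" and "HD - 1080id - 50"); other names may not follow it, and the "p" suffix for progressive formats is an assumption.

```python
import re

# Assumed naming pattern: "<label> - <lines><scan> - <rate>".
FORMAT_PATTERN = re.compile(
    r"^(?P<label>.+?) - (?P<lines>\d+)(?P<scan>id|i|p) - (?P<rate>[\d.]+)$"
)


def describe_format(name):
    """Split a video format name into its parts and flag the deinterlacer."""
    match = FORMAT_PATTERN.match(name)
    if match is None:
        raise ValueError(f"unrecognized format name: {name!r}")
    scan = match["scan"]
    return {
        "lines": int(match["lines"]),
        "rate": float(match["rate"]),
        "interlaced": scan in ("i", "id"),
        "deinterlacer": scan == "id",  # "id" selects the built-in deinterlacer
    }


print(describe_format("HD - 1080i - 50"))
print(describe_format("HD - 1080id - 50"))
```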

Video output

- Add one or more media outputs

- Name your media output

- Optionally override the default settings

- Choose the output format (resolution and frame rate) of your media outputs

- Choose the color profile

- Choose a color space from the selected color profile

- Choose the output buffer type

- Choose the output buffer size - this increases the stability of the output signal, but adds latency to the system

- Choose the output type

- Fill only - for internal compositing

- Fill and Key - for external compositing

- Learn more about Compositing

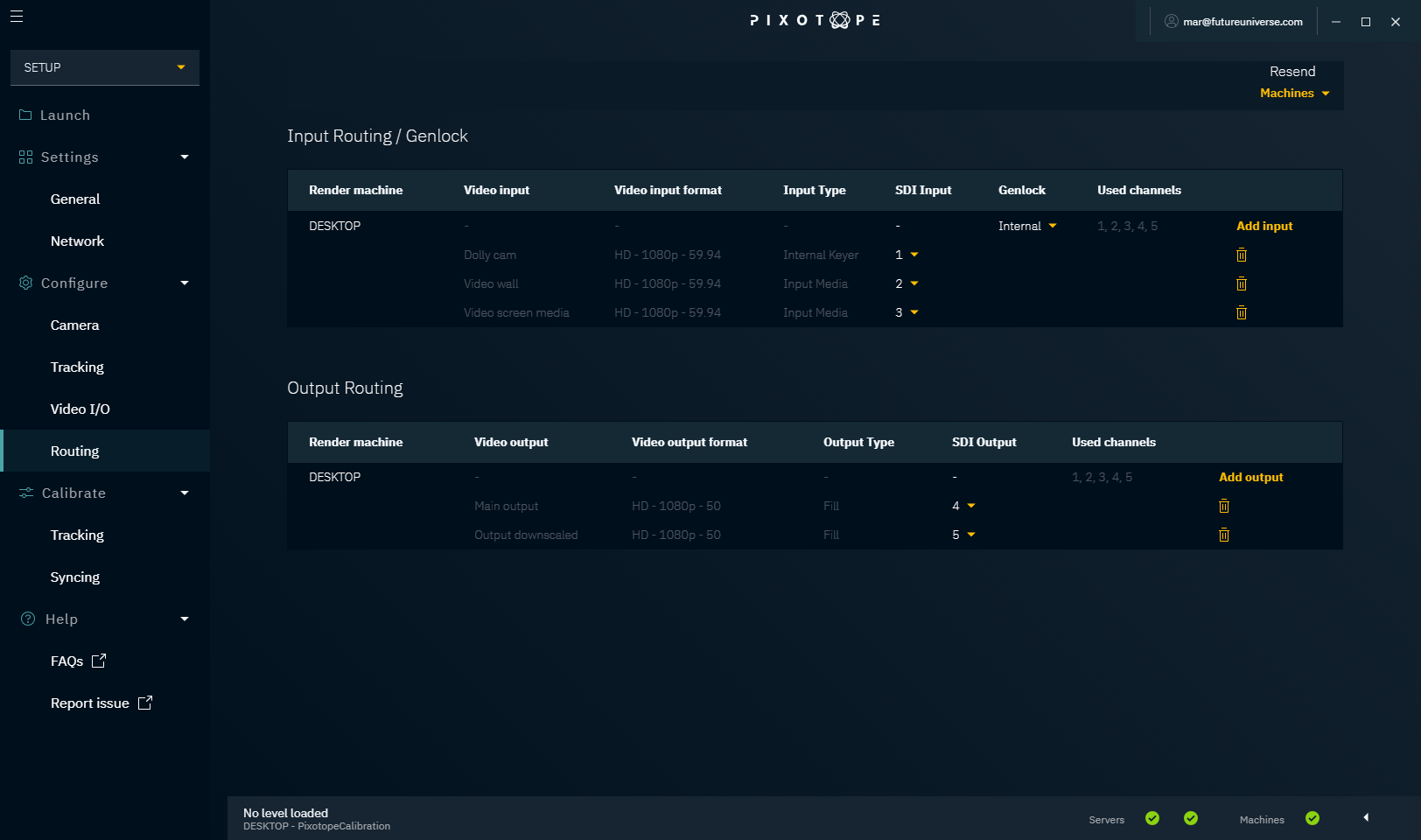

Routing

On the "Routing" page, we need to recreate the physical routing.

Input routing

Under input routing:

- Add all inputs planned for a specific machine

- Set the SDI Inputs they are wired to

Only one camera system can be added per machine.
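A planned routing can be checked against the one-camera-system-per-machine rule before it is entered in Director. The data layout below (tuples of machine, input name, and input kind) and the function name are hypothetical and do not reflect how Pixotope stores its routing.

```python
from collections import Counter


def machines_with_too_many_cameras(routes):
    """Return the machines that have more than one camera system routed to them."""
    counts = Counter(
        machine for machine, _name, kind in routes if kind == "camera_system"
    )
    return sorted(machine for machine, count in counts.items() if count > 1)


planned_routes = [
    ("Render1", "Cam1", "camera_system"),
    ("Render1", "VirtualMonitor", "media_input"),
    ("Render2", "Cam2", "camera_system"),
    ("Render2", "Cam3", "camera_system"),  # violates the rule
]

print(machines_with_too_many_cameras(planned_routes))
```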

Genlock

A genlock is used to synchronize the camera and tracking data. For each machine, choose one of the following:

- External ref - genlock comes from an external ref signal

- External SDI x - genlock is used from a specified SDI input

- Internal - an internal genlock is generated

There should be one common source for the genlock signal.

Output routing

Under output routing:

- Add all outputs planned for a specific machine

- Set the SDI Output they are wired to

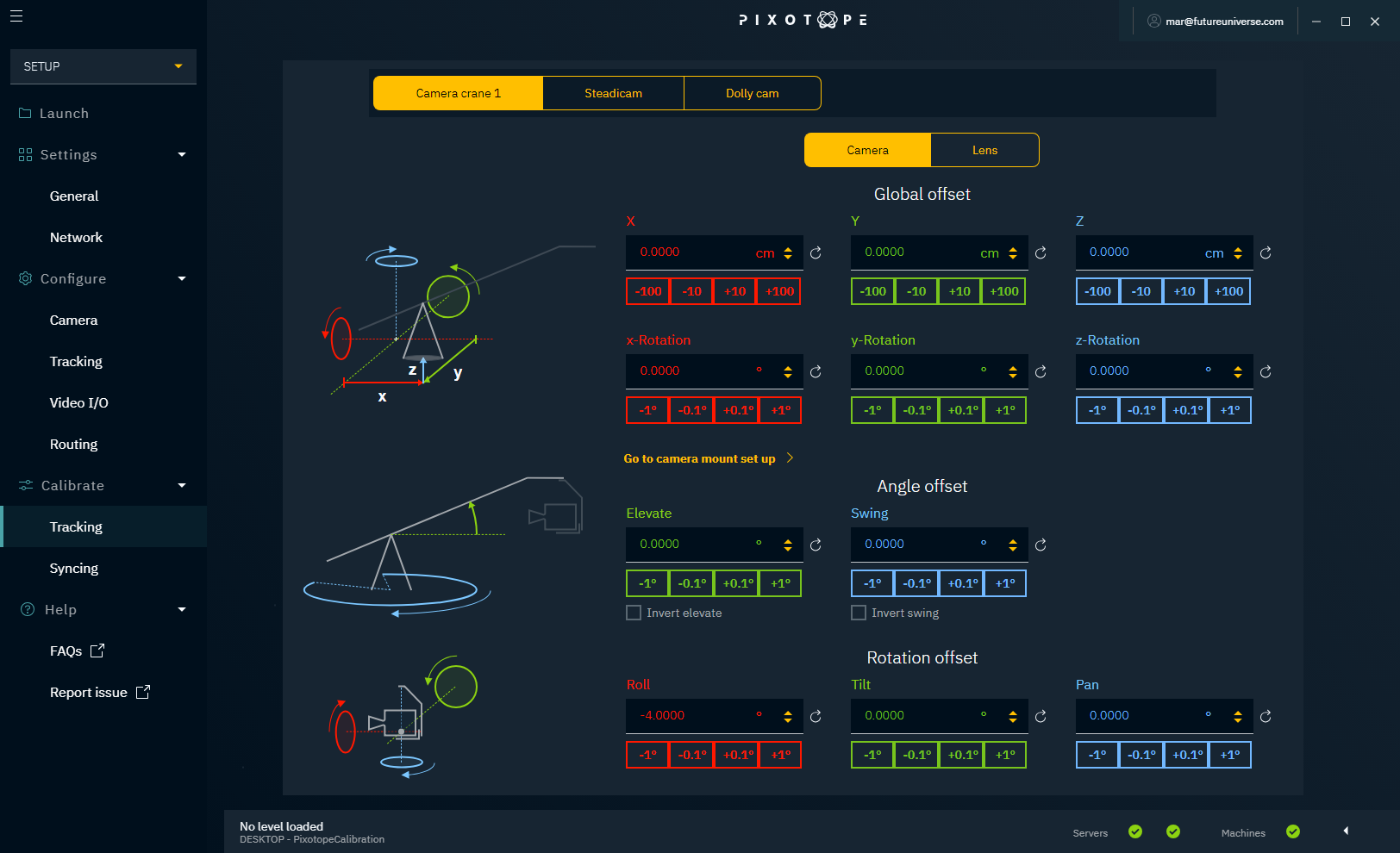

Calibrate

Under the "Calibrate" item in the main menu, there are 2 pages: "Tracking" and "Syncing".

Tracking

The tracking system is calibrated so it sends position and rotation data of the physical camera relative to the studio origin* into the Editor.

Physical camera → "TrackedCamera" in the Editor

Studio origin → "CameraRoot" in the Editor (yellow cone)

* The studio origin is a defined and marked point in your studio. It is practical to choose a point that is visible to the camera.

For advanced camera tracking systems

Advanced tracking systems will already give you position and rotation relative to a defined studio origin. For these systems, we suggest doing the tracking calibration on their end.

If you need technical support in calibrating the tracking system, please contact the vendor of your camera tracking system.

For simple camera tracking systems

Simple tracking systems provide only parts of the data, for example only rotation data. In this case, use the tracking panel to manually offset the tracking data so that you end up getting position and rotation data relative to the studio origin. This data offset is applied in the Camera Tracking Server.

Camera

Depending on the selected camera mount, different offset options are provided:

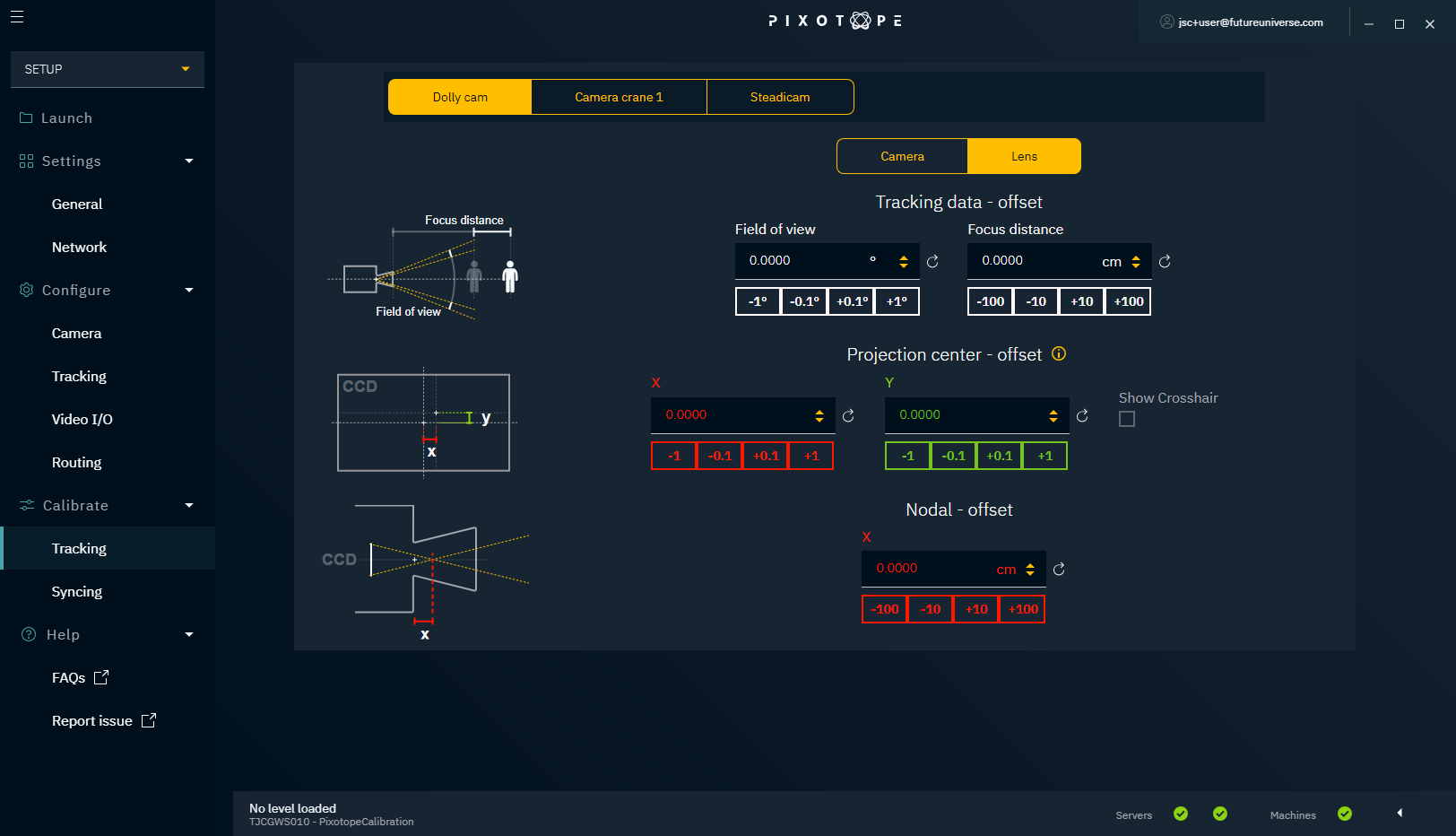

Lens

Check camera tracking

- Define and mark your studio origin

- In the Editor: check that "CameraRoot" is 0,0,0

- In the Editor: place a calibration cone at the CameraRoot

- The marked point on stage and the calibration cone should match up

- Move the camera to the outer sides of the field of view and see whether the marked point and calibration cone still match up (disregard time slipping)

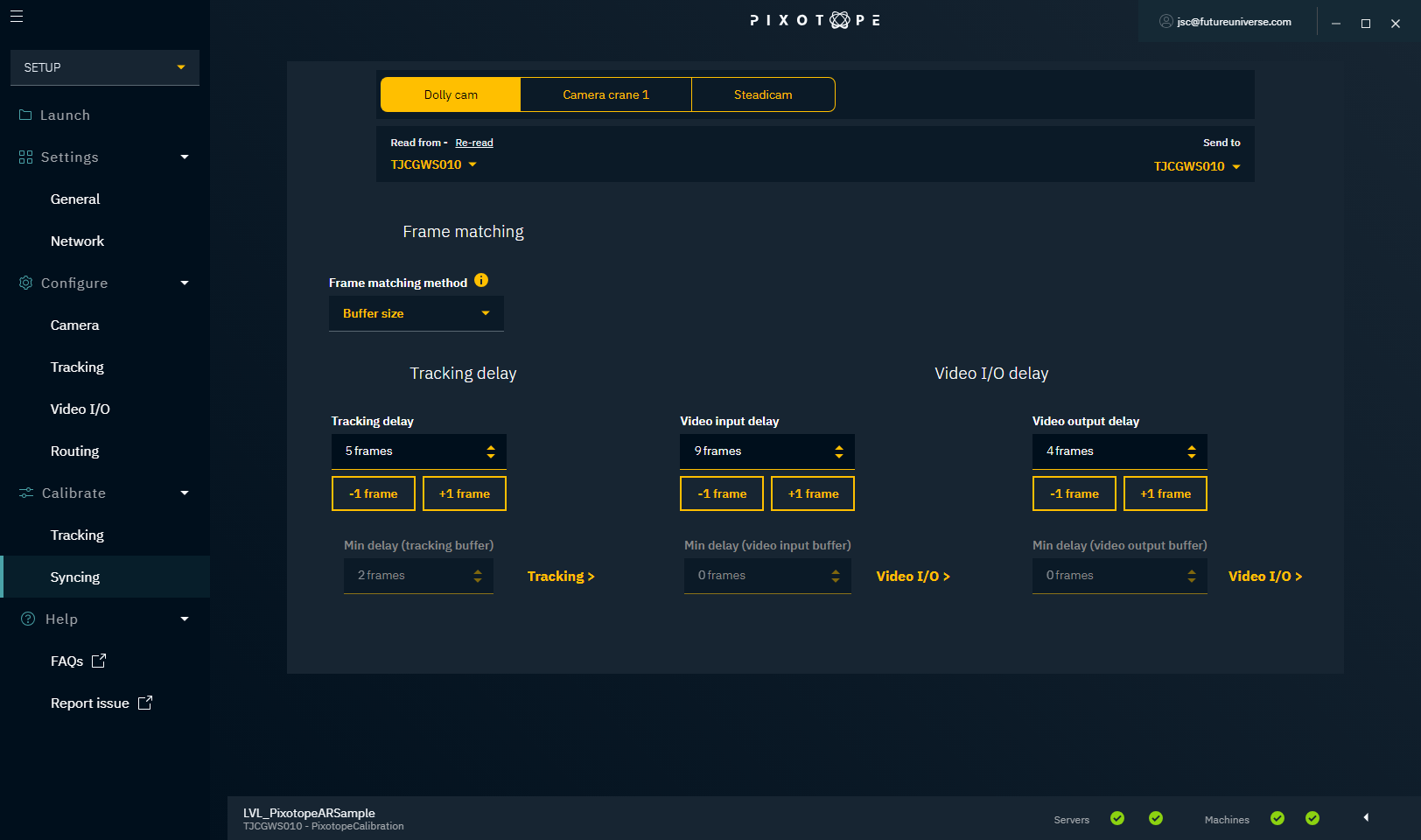

Syncing

Depending on the video pipeline, you can end up with delayed video or tracking data. To avoid 3D images slipping when the camera is moved, we need to synchronize video data and tracking data.

Check syncing

- Pan the physical camera quickly and stop abruptly

- Check to see whether the calibration cone slips temporarily from the marked point

- If the graphic moves first:

  - Subtract video input delay

  - If that is not possible, then add tracking delay

- If the camera feed moves first:

  - Subtract tracking delay

  - If that is not possible, then add video input delay
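The decision steps above can be written down as a small function. This is a sketch of the written procedure only; the function and parameter names are hypothetical, and it assumes that a delay can be reduced as long as it is above its minimum (see the delay settings further down).

```python
def suggest_sync_adjustment(first_mover, video_input_delay, tracking_delay,
                            min_video_input_delay=0, min_tracking_delay=0):
    """Suggest which delay to change after a quick pan-and-stop test.

    first_mover is "graphic" or "camera", depending on which moved first.
    """
    if first_mover == "graphic":
        # Prefer removing video input delay over adding tracking delay.
        if video_input_delay > min_video_input_delay:
            return "subtract video input delay"
        return "add tracking delay"
    if first_mover == "camera":
        # Prefer removing tracking delay over adding video input delay.
        if tracking_delay > min_tracking_delay:
            return "subtract tracking delay"
        return "add video input delay"
    raise ValueError("first_mover must be 'graphic' or 'camera'")


print(suggest_sync_adjustment("graphic", video_input_delay=0, tracking_delay=1))
```

Preferring to subtract a delay before adding one keeps the overall latency of the system as low as possible.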


Frame matching methods

Buffer size (no frame matching)

This ensures a stable buffer size by deleting overflow, and by duplicating packets when the buffer size is too low.

- Requires manual syncing

- Will cause stuttering when tracking data is unstable
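The buffer-size method can be sketched as a queue that is trimmed when it overflows and that repeats the last packet when it runs dry. This is a simplified illustration, not Pixotope's implementation: the class name is hypothetical, and "too low" is modeled here as "empty".

```python
from collections import deque


class StableBuffer:
    """Keep at most target_size tracking packets queued for the renderer."""

    def __init__(self, target_size):
        self.target_size = target_size
        self.packets = deque()
        self.last_packet = None

    def push(self, packet):
        self.packets.append(packet)
        # Delete overflow: drop the oldest packets beyond the target size.
        while len(self.packets) > self.target_size:
            self.packets.popleft()

    def pop(self):
        # Duplicate the previous packet when no new packet is available.
        if self.packets:
            self.last_packet = self.packets.popleft()
        return self.last_packet


buffer = StableBuffer(target_size=2)
for packet in ("p1", "p2", "p3"):
    buffer.push(packet)  # "p1" is dropped as overflow

print([buffer.pop() for _ in range(3)])  # the last packet is repeated
```

The repeated or dropped packets are what show up as stuttering when the tracking data arrives irregularly.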

Frame counter (relative frame matching)

The renderer ensures a stable timing difference between video and tracking by looking at the video frame counter.

- Requires manual syncing

- Needs video and tracking packets to have the same frame rate

Timecode (automatic frame matching)

Video and tracking get a generated timecode for every frame. The video frame is matched with the tracking packet with the same timecode.

- Both video and tracking must have the timecode from the same source (not supported by all tracking protocols)
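Timecode matching amounts to a lookup by timecode. The sketch below models frames and packets as dictionaries with a "timecode" key; that layout and the function name are assumptions made for illustration.

```python
def match_by_timecode(video_frames, tracking_packets):
    """Pair each video frame with the tracking packet that has the same timecode."""
    tracking_by_timecode = {p["timecode"]: p for p in tracking_packets}
    # A frame without a matching packet is paired with None.
    return [
        (frame, tracking_by_timecode.get(frame["timecode"]))
        for frame in video_frames
    ]


frames = [{"timecode": "10:00:00:01"}, {"timecode": "10:00:00:02"}]
packets = [
    {"timecode": "10:00:00:02", "pan": 12.5},
    {"timecode": "10:00:00:01", "pan": 12.0},  # arrival order does not matter
]

for frame, packet in match_by_timecode(frames, packets):
    print(frame["timecode"], packet)
```

Because the match is made on the timecode itself, no manual delay tuning is needed, which is why both signals must take their timecode from the same source.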

Tracking delay

This setting allows you to add a delay to the tracking data. The minimum delay is determined by the tracking buffer size, configured in Configure → Tracking → Camera tracking → Advanced.

Video input delay

This setting allows you to add a delay to the video input. The minimum delay is determined by the input buffer size, configured in Configure → Video I/O → Video Input.

Video output delay

This setting allows you to add a delay to the video output. The minimum delay is determined by the output buffer size, configured in Configure → Video I/O → Video Output.

Creating your first project

Continue to 1.2 | Setting up a Pixotope project.